Sean James.

If you are like me, you are fixated on being ready for the future. Many of us focus on anticipating future business needs while managing current challenges. For us, success means balancing today’s problems with preparing for what’s ahead. As my mentor said, “First be a problem expert, then you can be a solution expert.” This piece discusses upcoming needs, identifying new problems and the innovative solutions required to address them, from chip to grid.

What is driving next-generation problems

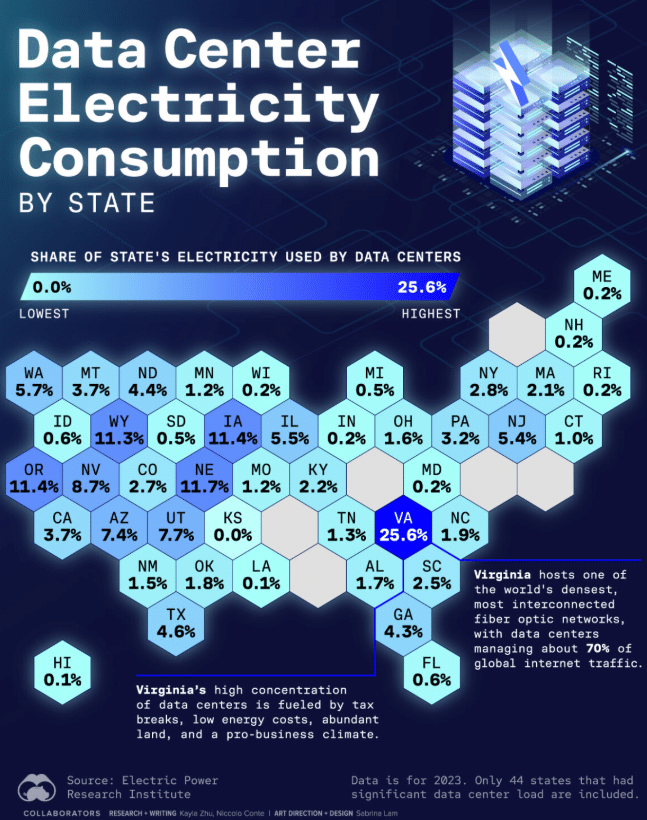

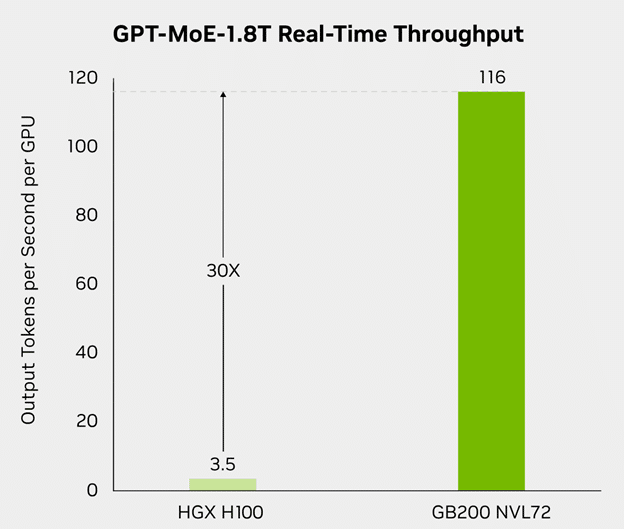

“We won’t need to worry about high density because Moore’s Law has ended.” So the thinking went: the ever-shrinking transistor was starting to bump up against the physical limits of matter. Innovations in chip design had increased speed while keeping chips energy efficient, but for the first time since the 1960s, that trend appeared to be coming to an end. For years we were told to prepare for ultra-hot 50 kW-plus racks that never materialized at hyperscale, which is why air cooling remains the dominant approach in many datacenters. After all, air is cheap, simple, and good enough for low-density racks.

What actually ended was Dennard Scaling, the rule that let chips get faster without getting hotter by lowering voltage as the transistors inside them shrank. When that broke and raw single-thread speed gains slowed, the industry shifted to multicore, wider vectors, smarter memory systems, and specialized accelerators like GPUs. Meanwhile, AI moved from niche to mainstream. Rack density didn’t stall; it accelerated. We have gone from under 30 kW per rack in 2018 to hundreds of kW per rack. Air is no longer cheap, simple, and good enough.

Image courtesy of Techovedas.com
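As a rough worked form of the Dennard scaling argument above, the relationship can be written with the standard dynamic-power approximation. The symbols are assumptions introduced here for illustration and do not appear in the original text: $C$ is switched capacitance, $V$ is supply voltage, $f$ is clock frequency, $A$ is chip area, and $k > 1$ is the factor by which transistor dimensions shrink.

$$
\begin{aligned}
P &= C V^2 f \\
P' &= \frac{C}{k}\left(\frac{V}{k}\right)^2 (k f) = \frac{P}{k^2}, \qquad A' = \frac{A}{k^2} \;\Rightarrow\; \frac{P'}{A'} = \frac{P}{A} \\
P'' &= \frac{C}{k}\, V^2 (k f) = P \;\Rightarrow\; \frac{P''}{A'} = k^2\,\frac{P}{A}
\end{aligned}
$$

The second line is the classic case: voltage shrinks along with the transistor, so power density stays flat. The third line is a simplified view of what happens once voltage can no longer be lowered: the same shrink raises power density by roughly $k^2$.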

Power Distribution

Getting energy into the datacenter is just the start. A datacenter electrical plant must power racks reliably and safely. Power architectures that worked at lower densities fall short as rack power doubles and triples with each server generation. We need commercialization and scale-up of new power-distribution technology, so capacity is built dense, efficient, and serviceable.

Backup power must evolve. The defining feature of a datacenter is the ability to ride through brownouts and blackouts. Diesel generators remain common today due to energy density and fuel availability, but sustained growth demands low- or zero-emission standby generation or long-duration energy storage (LDES) that can provide 8–24 hours of ride-through at campus scale, coordinate at millisecond speeds with server power supplies and uninterruptible power systems (UPS), and meet stringent local emissions requirements. To accelerate the new technologies we read about, we need scaled-up, multi-site pilots with open results and clear cost targets to drive learning curves and de-risk procurement.

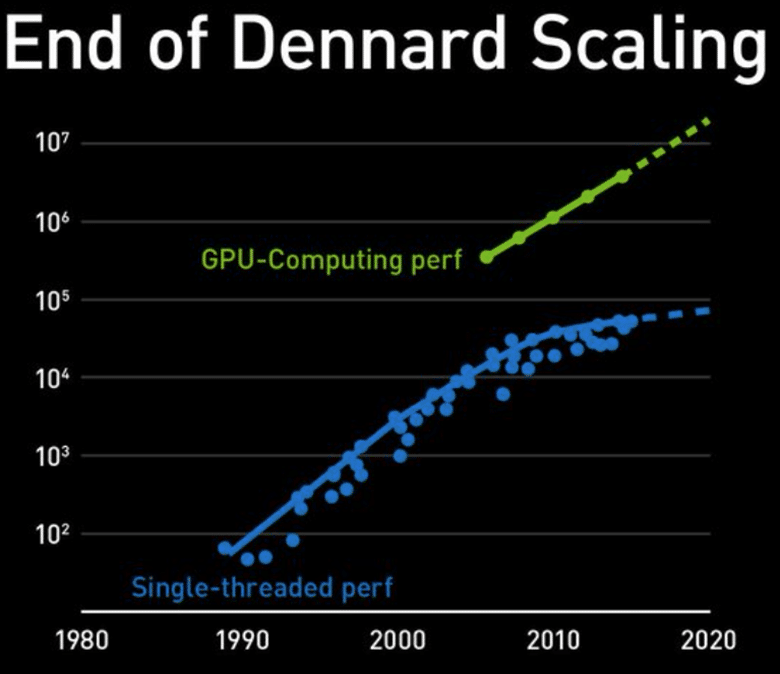

Grid-friendly embedded power electronics are next. Over the last two years, interactions at the chip and rack level have shown up at the grid edge, especially during AI training. When thousands of accelerators start and stop together, power can ramp faster than the grid can follow, causing voltage dips on surges and waste on drop-off. We need rack and power-shelf controls that limit ramp rates, buffer short spikes with onboard energy storage (for example, supercapacitors), and taper ramp-down. NVIDIA’s GB300 NVL72 demonstrates this direction; reported tests show material reductions in peak demand. The next step is engineering embedded power electronics that talk and coordinate with electrical equipment in the datacenter and the grid.

The power smoothing feature in the NVL72
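The ramp limiting and buffering described above can be sketched as a toy model. This is a minimal illustration under stated assumptions, not how any vendor's power shelf actually works: the `RampLimiter` class, its parameters, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RampLimiter:
    """Toy model of a power shelf that smooths grid draw with a small buffer."""
    max_ramp_kw: float        # hypothetical limit on grid-draw change per step
    buffer_kwh: float         # hypothetical usable buffer capacity
    step_hours: float         # length of one time step, in hours
    stored_kwh: float = 0.0   # energy currently held in the buffer
    grid_kw: float = 0.0      # power currently drawn from the grid

    def step(self, load_kw: float) -> float:
        """Advance one time step and return the resulting grid draw in kW."""
        # Move grid draw toward the load, clamped to the ramp limit.
        change = max(-self.max_ramp_kw, min(self.max_ramp_kw, load_kw - self.grid_kw))
        grid_kw = self.grid_kw + change
        gap_kwh = (load_kw - grid_kw) * self.step_hours
        if gap_kwh > 0:
            # Ramp-up: the buffer discharges to cover what the grid is not yet supplying.
            supplied = min(gap_kwh, self.stored_kwh)
            self.stored_kwh -= supplied
            grid_kw += (gap_kwh - supplied) / self.step_hours  # unmet part hits the grid
        else:
            # Ramp-down: the buffer absorbs the surplus so grid draw can taper.
            absorbed = min(-gap_kwh, self.buffer_kwh - self.stored_kwh)
            self.stored_kwh += absorbed
            grid_kw -= (-gap_kwh - absorbed) / self.step_hours  # no room left: drop faster
        self.grid_kw = grid_kw
        return grid_kw


def smooth(load_profile_kw, limiter):
    """Run a load profile through the limiter and return the grid-side profile."""
    return [limiter.step(load) for load in load_profile_kw]


# Illustrative numbers only: a 100 kW step load held for 20 s, then released.
profile = [100.0] * 20 + [0.0] * 20
limiter = RampLimiter(max_ramp_kw=10.0, buffer_kwh=1.0, step_hours=1 / 3600, stored_kwh=1.0)
grid = smooth(profile, limiter)
```

In this sketch the grid sees a gradual ramp in both directions while the buffer covers the difference. With no buffer, the limiter has nothing to draw on and the grid sees the full step, which is the behavior the paragraph above argues against.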

Cooling

With power comes cooling. Keeping power-dense GPU racks thermally stable comes with its own set of nascent problems at a time when our industry is trying to reduce water consumption. For over a decade, datacenters have used outside air with evaporative cooling to keep low-density servers cool, but today’s servers produce so much heat that direct water cooling is the only practical option. Cooling that water requires more mechanical cooling equipment, such as chillers, which drives up energy usage, footprint, and cost.

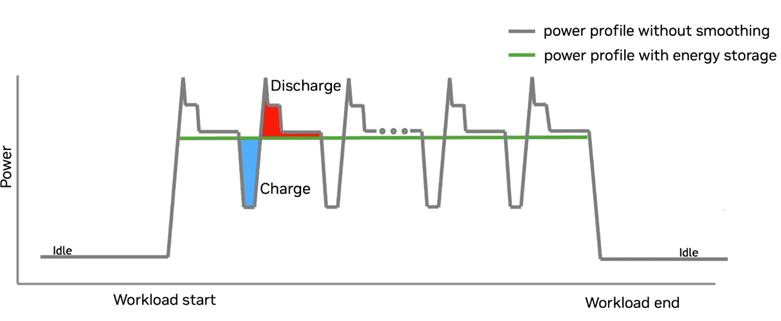

The latest offering from NVIDIA (GB200) is 30 times more powerful than the last generation (H100), draws almost twice the power, and pumps out twice the heat.

Datacenters need new, efficient cooling technology for today’s energy-dense compute racks that works in a variety of climates around the world. These systems need to be energy efficient and non-toxic, and they must consume no water. Technologies that allow chips to be cooled sufficiently at higher temperatures will eliminate the need for chillers and water consumption. Warmer water supply temperatures allow ambient air to lower the temperature of the water loop sufficiently using outdoor heat exchangers, such as dry coolers.
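The link between supply temperature and chiller-free operation can be sketched with a simple rule of thumb: a dry cooler can return water at roughly the ambient dry-bulb temperature plus an approach temperature. The function name, the 8 °C approach, and the sample temperatures below are all assumptions for illustration, not measured data.

```python
def chiller_free_fraction(ambient_temps_c, supply_setpoint_c, approach_c=8.0):
    """Fraction of samples where a dry cooler alone can reach the supply setpoint.

    Assumes the dry cooler delivers water at ambient + approach_c (a simplification).
    """
    if not ambient_temps_c:
        raise ValueError("need at least one ambient temperature sample")
    feasible = sum(1 for t in ambient_temps_c if t + approach_c <= supply_setpoint_c)
    return feasible / len(ambient_temps_c)


# Hypothetical ambient dry-bulb samples (deg C) standing in for a year of hourly data.
ambient = [5.0, 12.0, 18.0, 24.0, 29.0, 33.0, 37.0, 41.0]
cool_loop = chiller_free_fraction(ambient, supply_setpoint_c=25.0)
warm_loop = chiller_free_fraction(ambient, supply_setpoint_c=45.0)
```

With these made-up samples, raising the setpoint from 25 °C to 45 °C moves the chiller-free share from a quarter of the samples to most of them. A real assessment would use site weather data and the cooler manufacturer's approach figures.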

Two-phase liquids are a prime opportunity. When a liquid boils, it removes a large amount of heat from the surface heating it, which makes boiling a very effective means of cooling. Two-phase cooling can be used in cold plates, which channel pumped liquid to hot components within the server, such as GPUs. This leaves many other components in the server that still need cooling with air flow from the datacenter, and those components are increasing in density as well (e.g. high-bandwidth memory). Two-phase liquids can also be used in an immersion tank system, where the entire server is immersed in a vat of special fluid with dielectric properties (liquid that doesn’t go Bzzt when introduced to electricity), cooling everything in the server chassis. The vapor that rises is recondensed at the top of the tank and rains back down for more cooling. To help this scale, we need two key innovations: a complete overhaul of the classic server case architecture to reduce internal void space, and a non-toxic, environmentally friendly liquid that boils at the specified temperature.

Lastly on the cooling topic, we need more fungibility in cooling systems. Cooling fungibility is strategic insurance for AI-era datacenters: design once, support any mix of server types without costly rework. It hedges demand uncertainty (GPU vs. lower-density compute) so megawatts don’t sit stranded, speeds time-to-compute (weeks, not months, to densify), and lets you stage capex to actual hardware arrivals. Multi-vendor interchangeability reduces supply-chain delays and spare-parts risk, while standard procedures simplify operations, improve safety, and enable future automation. Energy and sustainability improve because you can run in the most efficient mode for the season and site, minimize water and refrigerant use, and add heat-reuse when it pencils. Regulatory and site risks drop since the same repeatable design can meet local and future codes without a redesign. Financially, this raises occupancy and residual value (tenant-agnostic halls), lowers lifecycle cost, and makes assets more “bankable.” Less complexity, more common cooling systems.

Energy

Access to affordable and reliable energy is essential for human advancement. After a period of relative stagnation, demand has surged, but the traditional energy market has not evolved at the same pace. The market’s challenge is to decarbonize while simultaneously building new supply to meet the growing needs of manufacturing, electric vehicles, and datacenters. To meet these demands, it must evolve and modernize quickly.

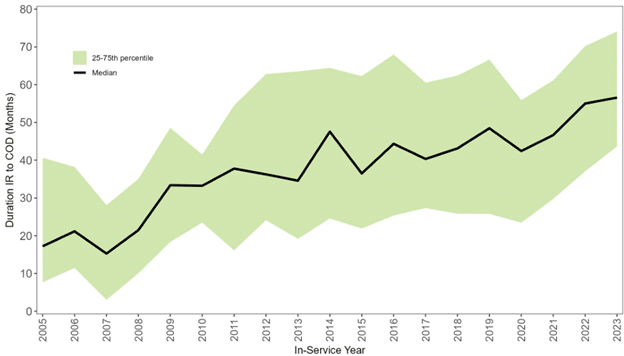

Interconnection queues, where new energy sources, storage, or large loads wait to connect, now commonly run multi-year and in many regions approach five years at the median. These delays affect both datacenters and power-plant developers. Some markets have adjusted processes for large loads (for example, ERCOT’s large-load interconnection process). These kinds of programs formalize a new “hook us up faster, we’ll be flexible” deal. More needs to happen to meet current and coming needs.

According to Lawrence Berkeley National Laboratory’s Queued Up: 2024 Edition, the median time from request to commercial operations rose from under 2 years in 2008 to about 5 years.

New energy technology must be fast-tracked because the old commercialization playbook takes too long. For example, lithium-ion batteries took seven decades to move from laboratory to today’s maturity. We need a playbook that cuts that to under a decade. The go-to solution today is natural gas where supply and infrastructure permit. The latest example is Ohio regulators approving a 200 MW gas plant for a hyperscale datacenter. Carbon-capture tech can reduce emissions and retrofits are emerging, but progress must accelerate.

There is no silver bullet. We will need a portfolio: carbon-free sources and cleaner fossil solutions. Renewables are vital, but we also need firm, dispatchable capacity, for example, batteries paired with renewables at longer durations or advanced nuclear designs. Google’s recent agreement to purchase 200 MW from Commonwealth Fusion Systems’ future ARC fusion plant reflects a willingness to underwrite innovation. Small modular reactors (SMRs) and fusion each face their own regulatory paths, and even after approvals, scaling will take time. A mix of solutions will be required.

Datacenter flexibility is another emerging tool. A Duke University study indicates the U.S. grid is under-utilized on average and that datacenters willing to shape about one percent of load under clear rules could unlock hundreds of gigawatts of near-term capacity. Because this tool is new, datacenter developers need incentives to take risks and modernized interconnection rules to ensure a fair exchange of value. This is why efforts like EPRI’s DCFlex and similar initiatives matter. The best path forward is collaboration between large loads and energy suppliers.
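The intuition behind flexibility unlocking capacity can be sketched with a toy headroom calculation. This is a simplified illustration, not the method used in the study cited above: the function names, the load profile, and the capacity figure are hypothetical. It asks how large a constant new load can be added to a system if that load agrees to curtail a small share of its annual energy during the few hours when the system is near its limit.

```python
def curtailed_fraction(system_load_mw, capacity_mw, new_load_mw):
    """Share of the new load's energy that must be curtailed to stay under capacity."""
    if new_load_mw <= 0:
        return 0.0
    curtailed = sum(
        min(new_load_mw, max(0.0, load + new_load_mw - capacity_mw))
        for load in system_load_mw
    )
    return curtailed / (new_load_mw * len(system_load_mw))


def flexible_headroom_mw(system_load_mw, capacity_mw, max_curtail_fraction, tol=0.01):
    """Largest constant new load (MW) whose curtailed share stays within the limit.

    Uses bisection, which works because the curtailed share never decreases
    as the new load grows (assuming existing load never exceeds capacity).
    """
    lo, hi = 0.0, capacity_mw
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if curtailed_fraction(system_load_mw, capacity_mw, mid) <= max_curtail_fraction:
            lo = mid
        else:
            hi = mid
    return lo


# Hypothetical system: 100 MW capacity, at peak for 1 hour out of 100, else 80 MW.
hourly_load = [80.0] * 99 + [100.0]
firm_only = flexible_headroom_mw(hourly_load, 100.0, max_curtail_fraction=0.0)
flexible = flexible_headroom_mw(hourly_load, 100.0, max_curtail_fraction=0.02)
```

In this made-up system a load that can never be curtailed has essentially no headroom, because the system is full during its one peak hour. A load willing to give up 2% of its energy fits roughly 20 MW, since the system sits well below capacity the rest of the time.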

Networks

AI compute traffic is starting to hit a network wall: fast chips are wasted if they can’t exchange data just as fast. Large training jobs synchronize gradients across thousands of GPUs and other accelerators, so job time is gated by the slowest packets; “stragglers” stall entire clusters. Tail latency, not the average, determines throughput, and industry engineers estimate 30% of training time can be lost to data movement. The next-generation network fabric must therefore deliver very high bandwidth with ultra-low, tightly controlled tail latency, plus AI-aware congestion management that reacts in milliseconds to prevent packet loss and costly resends on congested switches.
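Why the tail matters more than the average can be shown with a small calculation. The sketch below assumes a deliberately simple model that is not from the original text: each worker's message is independently either fast or slow, and a synchronous step waits for the slowest one. The function names and numbers are hypothetical.

```python
def mean_message_latency_ms(fast_ms, slow_ms, p_slow):
    """Average latency of a single message under the two-speed model."""
    return fast_ms + (slow_ms - fast_ms) * p_slow


def expected_sync_latency_ms(n_workers, fast_ms, slow_ms, p_slow):
    """Expected wait for a synchronous step that needs all n_workers to finish.

    The step is slow if at least one worker's message is slow, so the
    expected wait depends on P(any slow) rather than on the per-message mean.
    """
    p_any_slow = 1.0 - (1.0 - p_slow) ** n_workers
    return fast_ms + (slow_ms - fast_ms) * p_any_slow


# Illustrative numbers: 1 ms typical, 10 ms straggler, 1 in 1,000 messages slow.
per_message = mean_message_latency_ms(1.0, 10.0, 0.001)
one_worker = expected_sync_latency_ms(1, 1.0, 10.0, 0.001)
big_cluster = expected_sync_latency_ms(4096, 1.0, 10.0, 0.001)
```

Under these assumptions the average message stays close to 1 ms, yet a step across 4,096 workers almost always contains at least one straggler and waits close to the full 10 ms. Real fabrics have far richer latency distributions, but the shape of the problem is the same.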

What the datacenter also needs next in networks is an open, standardized interconnect that combines HPC-class performance with the ubiquity and tooling of good old Ethernet, ending proprietary lock-in while enabling multi-vendor sourcing. It must be energy-efficient and low-heat to fit within power and cooling budgets, and software-defined with deep telemetry so schedulers can avoid hotspots and keep GPUs busy. Finally, we need stronger site-to-site networking (metro/region DCI) so clusters can span locations, giving far more flexibility in where we place capacity without sacrificing training speed. This will also help soften some of the energy supply issues.

Streamlined and sustainable construction

Months per MW is our new key metric. To keep build times predictable and fast, we need a repeatable delivery system that de-risks labor scarcity and schedule volatility. We need elegant designs that are locked in and use factory-built, pre-certified assemblies with common modular interface points (power, cooling, controls) so inspections are predictable and changes are avoided. We also need strategic procurement (framework agreements, early buys, multi-sourcing) and truly collaborative delivery that rewards finishing early and captures field insights. The goal is to accelerate time from go signal to compute-online, cut carrying costs and change orders, diversify supply, and credibly lower embodied carbon by specifying biogenic construction materials where appropriate and sourcing from manufacturers running on clean energy.

Regulation must keep pace with the hardware curve. Grid and siting caps (e.g., regional large-load limits) collide with server roadmaps that are far more powerful, and hotter, each generation. What’s needed next: expand fast-track pathways and one-stop reviews for standardized designs; move to performance-based codes (thermal, water, refrigerants, acoustics) instead of prescriptive tech lists; allow fuel flexibility (renewable diesel, efficient gas, hydrogen) with clear compliance routes; streamline approvals for heat reuse and water recovery; and enable staged energization with onsite generation so compute can come online while grid upgrades finish. The business payoff is more viable sites, earlier revenue, lower risk premiums, and ESG gains without sacrificing speed.

Wrap up

The goal of this piece is to make sure we are looking around the corner at the next set of issues, even while we are all busy attacking today’s. The future of datacenter design for the AI era demands a holistic approach that integrates next-gen power and energy management, flexible cooling, high-performance open networking, and sustainable, repeatable construction practices. That is chip-to-grid systems thinking. By prioritizing fungibility, interoperability, and efficiency across infrastructure layers, datacenter developers will minimize risk, maximize asset value, and stay ahead of escalating compute requirements. Ultimately, embracing these strategies positions datacenters to deliver scalable, resilient, and responsible AI compute capacity well into the future.